Defending Against Sponge Attacks in GenAI Applications

The Hidden Threat: What Is a Sponge Attack?

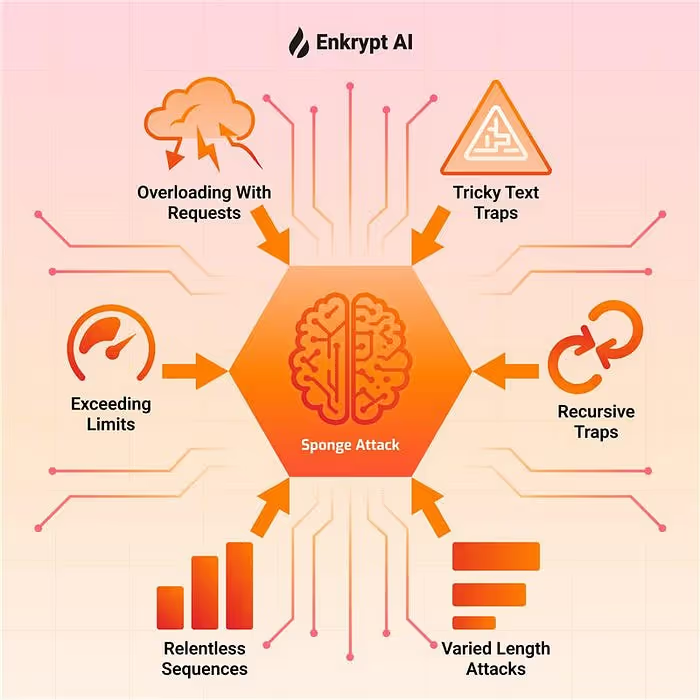

A sponge attack targets your AI application’s resource usage (CPU, memory, inference tokens) without delivering any valuable output. It resembles a Denial-of-Service (DoS) attack, but one focused on the model itself. These attacks can cause:

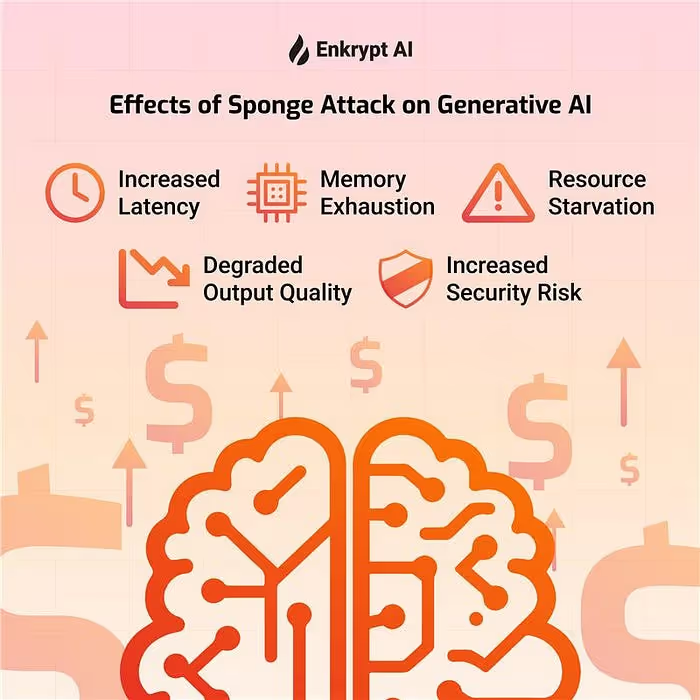

- Resource exhaustion leading to timeouts or crashes

- Increased cloud costs through wasted compute

- Service degradation for legitimate users

They exploit the model’s heavy consumption characteristics — like exploding context windows or unchecked generation loops — to bring systems to a halt.

LLMs are particularly vulnerable because their resource usage scales with input and output size.
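To make the scaling concrete, here is a minimal sketch of a per-request cost estimate. It assumes simple linear per-token pricing; the function name, the prices, and the token counts are all hypothetical and chosen only for illustration, so substitute your provider's real rates.

```python
def estimate_request_cost(
    input_tokens: int,
    output_tokens: int,
    usd_per_1k_input: float = 0.001,   # hypothetical price, not a real provider rate
    usd_per_1k_output: float = 0.002,  # hypothetical price, not a real provider rate
) -> float:
    """Rough cost of one request under an assumed linear per-token pricing model."""
    input_cost = (input_tokens / 1000) * usd_per_1k_input
    output_cost = (output_tokens / 1000) * usd_per_1k_output
    return input_cost + output_cost


# A typical short question versus an oversized prompt that also triggers a long answer.
normal = estimate_request_cost(input_tokens=200, output_tokens=300)
sponge = estimate_request_cost(input_tokens=50_000, output_tokens=4_000)
ratio = sponge / normal

print(f"normal request: ${normal:.4f}")
print(f"sponge request: ${sponge:.4f}")
print(f"cost multiplier: {ratio:.1f}x")
```

Even under this simplified model, a single oversized request costs many times more than a normal one, and the gap grows with every extra input or output token.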

Why Sponge Attacks Matter

LLMs power a growing number of critical applications — from healthcare and legal assistants to voice agents and compliance tools.

Yet sponge attacks aren’t just theoretical; they’re becoming a real-world threat:

- Financial strain: Faulty inputs can artificially inflate inference costs.

- UX degradation: Slow or unresponsive services frustrate users.

- No need for jailbreaks: These attacks use benign inputs, not prompts that bypass filters.

- Missed by standard tests: Conventional code-based testing often overlooks these vectors.

Research shows sponge inputs can multiply energy consumption and latency by 10–200×. It’s not just inefficiency; it’s unpredictable system behavior and escalating operational risk.

Research & Defense Strategies

Foundational Research

- “Sponge Examples: Energy-Latency Attacks on Neural Networks” demonstrates how crafted inputs spike energy use and delays across tasks.

- OWASP LLM Top‑10 explicitly lists Model Denial of Service as a major risk (LLM04 in the 2023 edition; the 2025 edition covers it under Unbounded Consumption, LLM10).

- AutoDoS and P‑DoS studies show that even black-box LLMs can be forced into extreme output loops.

Mitigation Tactics

- Model pruning reduces vulnerability surface by constraining resource usage.

- Prompt length limits, rate limiting, and generation caps prevent runaway inference.

- Sponge-specific guardrails detect and block input patterns prone to resource exhaustion.
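As a rough illustration of the tactics above, the sketch below combines a prompt length limit, a simple repetition check, and a token-bucket rate limiter. All names and thresholds here (`MAX_INPUT_CHARS`, `MAX_REPETITION_RATIO`, `check_prompt`, `TokenBucket`) are hypothetical and would need tuning for a real deployment; this is not any vendor's guardrail implementation.

```python
import time
from collections import Counter
from typing import Callable, Tuple

# Hypothetical thresholds; tune them to your model and traffic.
MAX_INPUT_CHARS = 8_000              # prompt length limit
MAX_OUTPUT_TOKENS = 512              # generation cap to pass to your model client
MAX_REPETITION_RATIO = 0.5           # block if one word makes up over half of a long prompt
MIN_WORDS_FOR_REPETITION_CHECK = 50  # skip the repetition check for short prompts


def check_prompt(prompt: str) -> Tuple[bool, str]:
    """Return (allowed, reason). Cheap checks that run before any inference."""
    if len(prompt) > MAX_INPUT_CHARS:
        return False, "prompt too long"
    words = prompt.lower().split()
    if len(words) >= MIN_WORDS_FOR_REPETITION_CHECK:
        _, top_count = Counter(words).most_common(1)[0]
        if top_count / len(words) > MAX_REPETITION_RATIO:
            return False, "prompt is highly repetitive"
    return True, "ok"


class TokenBucket:
    """Per-client rate limiter: up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


print(check_prompt("What is the capital of France?"))
print(check_prompt("hello " * 100))
print(check_prompt("hello " * 50_000))
```

In practice these checks would sit in front of the model call, with one `TokenBucket` per client or API key and `MAX_OUTPUT_TOKENS` passed as whatever output limit your model client exposes. A single-word frequency check is deliberately crude and will miss repeated phrases or paraphrased loops, so treat it as a first filter rather than complete sponge detection.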

How Enkrypt AI Secures Your System

Enkrypt AI offers integrated defense through:

Automated Red Teaming for Sponge Attacks

Run “sponge tests” directly within the platform. The platform generates adversarial inputs (e.g., “hello” repeated 50K times) to simulate real-world abuse, with no setup required.
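For a feel of what such a test measures, here is a small local sketch that builds a repetition payload and times a model call against a baseline prompt. It is not Enkrypt AI's implementation: `make_repetition_payload`, `measure_latency`, and the `fake_model` stand-in are hypothetical names, and you would swap `fake_model` for your own model client.

```python
import time
from typing import Callable, Dict


def make_repetition_payload(word: str = "hello", repeats: int = 50_000) -> str:
    """Build a sponge-style prompt by repeating one word many times."""
    return " ".join([word] * repeats)


def measure_latency(call_model: Callable[[str], str], prompt: str) -> Dict[str, float]:
    """Time a single model call and record prompt and output sizes."""
    start = time.perf_counter()
    output = call_model(prompt)
    elapsed = time.perf_counter() - start
    return {
        "prompt_chars": len(prompt),
        "output_chars": len(output),
        "seconds": elapsed,
    }


def fake_model(prompt: str) -> str:
    """Stand-in for a real model client (assumption); replace with your own call."""
    return prompt[:100]


baseline = measure_latency(fake_model, "What is a sponge attack?")
sponge = measure_latency(fake_model, make_repetition_payload())

print("baseline:", baseline)
print("sponge:  ", sponge)
```

Comparing the two results against a real endpoint shows how much latency and output volume a single repetitive prompt adds relative to normal traffic.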

Visual Risk Reporting

View live results: which attacks succeeded, where CPU/token usage spiked, and how this impacts your service.

Built-in Guardrails

Apply sponge detection logic to automatically block excessive or looping inputs at runtime — before they consume system resources.

Ease of Use

From test to mitigation in minutes, not weeks — no rewrites, no complex integration, just actionable protection.


Demo Recap

In our walkthrough, we:

- Viewed a typical sponge attack (repeating “hello” 50K+ times)

- Detected its resource drain via red teaming

- Applied guardrails to instantly block it

This end-to-end process showcases how Enkrypt AI protects your AI from resource exhaustion — preserving availability, performance, and cost predictability.

Final Thoughts

AI applications must be robust against both “clever” attacks and the mundane ways they waste resources. Sponge attacks pose a real threat to uptime, cost management, and user experience — even without malicious intent.

With Enkrypt AI, teams gain proactive testing, system-level insight, and automated protection against this growing class of AI DoS attacks.

Ensure your AI is not just functional — make it resilient.

Learn More

- 🧠 Dive deeper on OWASP LLM Top‑10: “Model Denial of Service (LLM04)”

- 📘 Read research on Sponge Examples in LLMs

- 🚀 Try red teaming and sponge guardrails at Enkrypt AI

Reach out if you’d like to speak with an AI expert or receive a slide deck or demo on sponge protection and LLM resilience.
